A background to Liquid Cooling

Liquid cooling has quietly underpinned high-performance computing since IBM’s water-cooled mainframes of the 1960s, but its role has become central in today’s AI-driven data centres. As rack densities surged past 30 kW and GPUs such as NVIDIA’s H100 began drawing up to 700 W each, traditional air cooling reached its practical thermal limits. Enter Direct Liquid Cooling (DLC): specifically, water-based single-phase systems using copper cold plates.

Single-phase DLC circulates treated water or water-glycol mixtures through copper cold plates mounted directly on heat-generating components. The approach combines the high thermal conductivity of copper and the high heat capacity of water with low complexity and compatibility with existing server designs.

Yet even single-phase DLC is nearing its limits. The latest AI accelerators and custom silicon are pushing heat flux beyond what single-phase DLC can reliably dissipate. With GPU junction temperatures rising and facility water temperatures constrained by corrosion and safety margins, thermal throttling is becoming a real problem. The semiconductor industry’s recommended flow rate of 1.5 litres per minute (LPM) per kW is increasingly difficult to sustain with single-phase DLC without excessive pump energy or pressure drop.
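As a rough illustration of what the 1.5 LPM per kW guideline implies, the energy balance Q = ṁ·c_p·ΔT gives the coolant temperature rise at that flow. The minimal Python sketch below assumes pure water near 30 °C (density 995.7 kg/m³, specific heat 4180 J/(kg·K)); the function names and the 100 kW rack are hypothetical, and water-glycol mixtures, with their lower specific heat, would see a larger rise.

```python
WATER_DENSITY = 995.7   # kg/m^3, assumed value for water near 30 °C
WATER_CP = 4180.0       # J/(kg·K), assumed value for water near 30 °C


def required_flow_lpm(heat_kw: float, lpm_per_kw: float = 1.5) -> float:
    """Coolant flow (L/min) needed for a heat load under a per-kW guideline."""
    return heat_kw * lpm_per_kw


def coolant_temperature_rise(heat_w: float, flow_lpm: float,
                             density: float = WATER_DENSITY,
                             cp: float = WATER_CP) -> float:
    """Bulk coolant temperature rise in kelvin, from Q = m_dot * cp * dT."""
    mass_flow_kg_s = flow_lpm / 60_000.0 * density   # L/min -> m^3/s -> kg/s
    return heat_w / (mass_flow_kg_s * cp)


if __name__ == "__main__":
    rack_kw = 100.0                                   # hypothetical rack load
    flow = required_flow_lpm(rack_kw)
    rise = coolant_temperature_rise(rack_kw * 1000.0, flow)
    print(f"{rack_kw:.0f} kW rack: {flow:.0f} L/min, coolant rise {rise:.1f} K")
```

Because the guideline scales flow linearly with heat load, it effectively fixes the coolant temperature rise at roughly 10 K whatever the rack size; removing more heat with single-phase water means pumping proportionally more of it.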

As compute density climbs and AI workloads intensify, the industry is approaching an inflection point. While single-phase DLC remains the most mature and widely deployed solution, next-generation cooling, especially two-phase DLC, is no longer optional.

IBM's mainframe in the 1960s

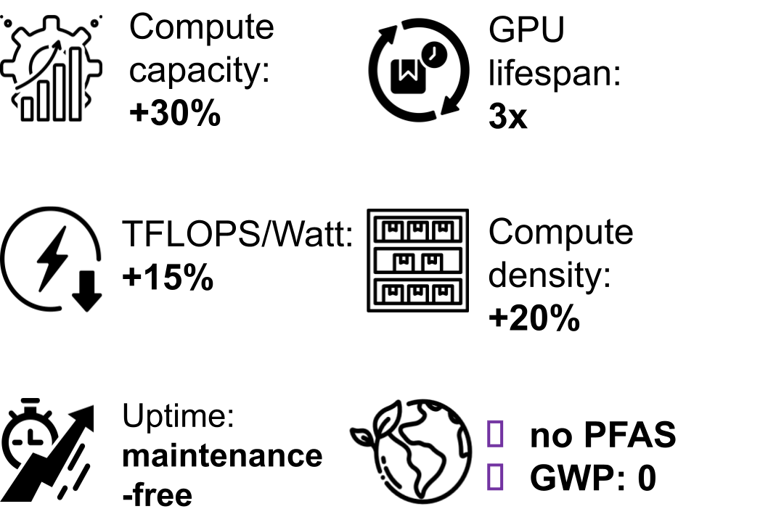

Two-phase cooling absorbs heat mainly as latent heat of vaporisation, so it can carry far more heat per unit of coolant flow than single-phase cooling, at a nearly constant temperature. Two-phase DLC has nonetheless seen limited deployment in data centres because of relatively thick, low-performance cold plates, concerns about PFAS refrigerants and operational overhead. Infiniflux’s innovative cold plate is both mechanically robust and thin, enabling an ultra-low thermal resistance of 0.009 K/W. Our high-performance, PFAS-free refrigerant provides unrivalled cooling density in passive, maintenance-free operation. Our system needs 5 to 20 times less pump power than conventional DLC systems.
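To put the 0.009 K/W figure in perspective, the temperature rise across a thermal resistance is simply ΔT = P·R. The short sketch below assumes the quoted value applies between the chip surface and the coolant and ignores the other resistances in the chip package; the power levels and the 20 K temperature budget are illustrative choices, not measured data.

```python
COLD_PLATE_R = 0.009  # K/W, quoted ChipCool figure (reference points assumed)


def temperature_rise(power_w: float, r_k_per_w: float = COLD_PLATE_R) -> float:
    """Temperature rise across the cold plate in kelvin: dT = P * R."""
    return power_w * r_k_per_w


def max_power(dt_budget_k: float, r_k_per_w: float = COLD_PLATE_R) -> float:
    """Largest heat load (W) that stays within a temperature budget: P = dT / R."""
    return dt_budget_k / r_k_per_w


if __name__ == "__main__":
    # Illustrative accelerator power levels, not vendor specifications
    for p in (700, 1000, 1500):
        print(f"{p:>5} W -> {temperature_rise(p):.1f} K rise")
    # Illustrative 20 K budget between chip surface and coolant
    print(f"20 K budget -> {max_power(20):.0f} W")
```

Read the other way round, a lower resistance lets the same temperature budget absorb more power, which is what "cooling density" refers to here.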

How Infiniflux is changing the game

Key benefits of ChipCool™

For Data Centre operators

Less throttling, so higher revenue per server

Higher rack density, so higher revenue per m²

90% reduction in cooling energy (vs. air cooling)

Near-zero WUE (water usage effectiveness)

Easy retrofit to existing facility water loops

Heat reuse potential

Regulatory compliance (GWP, ODP, PFAS)

ESG-aligned infrastructure (SFDR, TCFD, EU Taxonomy) that can attract green bond financing

European supplier

For Server OEMs

Higher value for Data Centre customers

Supports higher GPU TDP

Up to 25% chassis volume reduction

2× longer component life

Global deployability

For GPU producers

Extra thermal headroom removes thermal bottlenecks for high-power AI accelerators

10 °C lower chip temperature

Option to shrink the die (higher yield, lower unit cost)

Option to increase chip performance (higher ASP)

Higher warranty confidence

3D cooling
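The 10 °C and 2× figures above are consistent with a common reliability rule of thumb: for thermally activated failure mechanisms, the Arrhenius model predicts that lifetime roughly doubles for every 10 °C drop in operating temperature. The sketch below computes that acceleration factor. The 0.7 eV activation energy and the 85 °C baseline are illustrative assumptions, not Infiniflux data; real activation energies depend on the failure mechanism.

```python
import math

BOLTZMANN_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def arrhenius_lifetime_factor(t_hot_c: float, t_cool_c: float,
                              activation_energy_ev: float = 0.7) -> float:
    """Expected lifetime at t_cool_c relative to t_hot_c (Arrhenius model).

    AF = exp(Ea / k * (1 / T_cool - 1 / T_hot)), with temperatures in kelvin.
    activation_energy_ev = 0.7 is an illustrative assumption only.
    """
    t_hot = t_hot_c + 273.15
    t_cool = t_cool_c + 273.15
    return math.exp(activation_energy_ev / BOLTZMANN_EV
                    * (1.0 / t_cool - 1.0 / t_hot))


if __name__ == "__main__":
    # Hypothetical 85 °C baseline, cooled by 10 °C
    factor = arrhenius_lifetime_factor(85.0, 75.0)
    print(f"Lifetime factor for an 85 -> 75 °C drop: {factor:.2f}")
```

Under these assumptions the factor comes out at roughly 1.9; a higher activation energy or a lower baseline temperature changes it, so the rule of thumb is a guide rather than a guarantee.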

Compatibility

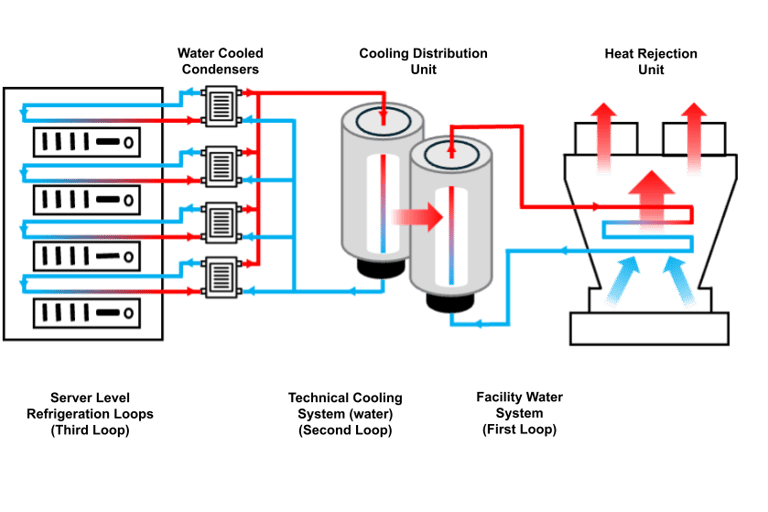

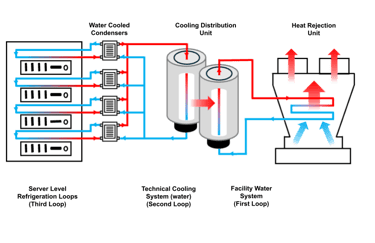

ChipCool uses a different system architecture from conventional DLC, one that makes it highly compatible with existing liquid cooling infrastructure.

At the heart of the ChipCool system is what we call the Third Loop: a hermetically sealed cooling loop at server level. The refrigerant in the Third Loop never comes into contact with any other liquid in the system, so cross-contamination cannot occur. Furthermore, our proprietary refrigerant contains no water, removing the risk of biofouling.

The ChipCool system is compatible with primary loops and CDUs from a range of manufacturers. This avoids vendor lock-in and makes retrofitting existing data centre installations easier. An additional benefit is that the pressure drop the CDU must overcome is about 80% lower than in conventional DLC systems, which yields a large saving in pump energy.
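Why a lower pressure drop matters: the power a pump must draw is P = Δp·Q/η, so at a fixed flow rate pump power scales directly with pressure drop. The sketch below assumes a hypothetical 100 kPa loop pressure drop for a conventional system, the same hypothetical 150 L/min flow in both cases and a 50% pump efficiency; only the 80% reduction comes from the text above.

```python
def pump_power_w(pressure_drop_pa: float, flow_lpm: float,
                 pump_efficiency: float = 0.5) -> float:
    """Pump input power in watts: P = dp * Q / eta.

    pump_efficiency = 0.5 is an illustrative assumption.
    """
    flow_m3_s = flow_lpm / 60_000.0          # L/min -> m^3/s
    return pressure_drop_pa * flow_m3_s / pump_efficiency


if __name__ == "__main__":
    flow = 150.0                                  # L/min, hypothetical
    dp_conventional = 100_000.0                   # Pa, hypothetical
    dp_chipcool = dp_conventional * (1 - 0.80)    # "about 80% lower"
    p_conv = pump_power_w(dp_conventional, flow)
    p_chip = pump_power_w(dp_chipcool, flow)
    print(f"Conventional: {p_conv:.0f} W, ChipCool: {p_chip:.0f} W "
          f"({1 - p_chip / p_conv:.0%} saving)")
```

The pressure-drop saving translates one-for-one into pump power only if the flow rate stays the same; in practice the required flow also depends on the heat load and the allowable coolant temperature rise.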

The Future of AI

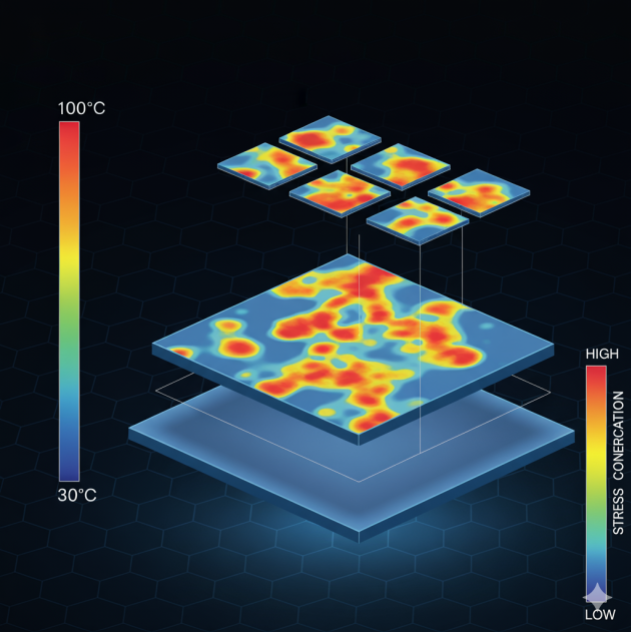

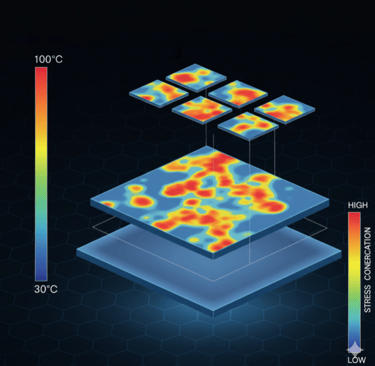

Hotspots in 3D-stacked chips act like tiny, trapped furnaces: steep temperature gradients create large thermo-mechanical stresses inside the stack.

This stress accelerates device aging and contributes to thermal runaway and runtime throttling, all of which sharply reduce yield. Because heat cannot escape vertically through the stack, these thermal limits make stacking high-power AI GPU dies infeasible. 3D chip architecture is the 'holy grail' for AI, as the shorter interconnect distance between logic and memory provides 2× compute. Infiniflux is developing a true cooling breakthrough to unlock safe, high-performance 3D AI stacks.

Contact us

info@infiniflux.cool

© Infiniflux Ltd 2025. All rights reserved.

London GreenCity

Waterfront ARC West Building

3rd floor, Manbre Road

London, United Kingdom

W6 9RU

272 Bath Street

Glasgow

Scotland

G2 4JR

Correspondence address